Data Center Innovation – how measurement accuracy enables energy efficiency

In the following article, Keith Dunnavant from Munters and Anu Kätkä from Vaisala will describe recent trends in the data center sector, and discuss the impact of HVAC measurements on energy efficiency. With spiraling energy costs and governments urgently seeking greenhouse gas emissions reduction opportunities, data center energy efficiency is the focus of international scrutiny.

Both of the authors have long-standing experience and expertise in energy management at data centers. Munters is a global leader in energy efficient and sustainable climate control solutions for mission-critical processes including data centers, and Vaisala is a global leader in weather, environmental, and industrial measurements.

Data center energy usage

‘Power usage effectiveness’ (PUE) as the measure

The global demand for electricity is about 20,000 terawatt hours; the ICT (Information and Communications Technology) sector uses 2,000 terawatt hours, and data centers use approximately 200 terawatt hours, which is 1 percent of the total. Data centers therefore represent a significant part of most countries’ energy consumption. It has been estimated that there are over 18 million servers in data centers globally. In addition to their own power requirements, these IT devices also require supporting infrastructure such as cooling, power distribution, fire suppression, uninterruptible power supplies and generators.

In order to compare the energy efficiency of data centers, it is common practice to use ‘power usage effectiveness’ (PUE) as the measure. PUE is defined as the ratio of the total energy used by a data center to the energy used by the IT equipment alone. Ideally, PUE would be 1, which would mean that all energy is spent on IT and the supporting infrastructure consumes none.

So, to minimize PUE, the objective is to reduce the consumption of the supporting infrastructure, such as cooling and power distribution. The typical PUE in traditional legacy data centers is around 2, whereas big hyperscale data centers can reach below 1.2. The global average was approximately 1.67 in 2020, which means that, on average, about 40 percent of total energy use was non-IT consumption. However, PUE is a ratio, so it says nothing about the total amount of energy consumed: if the IT devices consume a lot of energy relative to the cooling system, the PUE will look good even when total consumption is high. It is therefore important to also measure total power consumption, as well as the efficiency and lifecycle of the IT equipment. Additionally, from an environmental perspective, consideration should be given to how the electricity is produced, how much water is consumed (both in generating the electricity and on site for cooling), and whether waste heat is being utilized.
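As a minimal sketch of the arithmetic above, the following Python computes PUE and the non-IT share of energy (1 − 1/PUE). The two facilities in the usage lines are hypothetical and chosen only to show that a site with higher IT consumption can report a better PUE while using more energy in total.

```python
def pue(total_energy: float, it_energy: float) -> float:
    """Power usage effectiveness: total facility energy / IT energy (same units)."""
    if it_energy <= 0:
        raise ValueError("IT energy must be positive")
    return total_energy / it_energy


def non_it_share(pue_value: float) -> float:
    """Fraction of total energy not consumed by IT equipment: 1 - 1/PUE."""
    return 1.0 - 1.0 / pue_value


# Global average quoted in the text: PUE of about 1.67.
print(f"{non_it_share(1.67):.1%} of energy is non-IT")

# Two hypothetical sites with the same 500 MWh of overhead (cooling, power losses).
lean = pue(1_000 + 500, 1_000)    # 1,000 MWh of IT load
heavy = pue(2_000 + 500, 2_000)   # 2,000 MWh of IT load
print(lean, heavy)  # the heavier site reports the better PUE
```

The second site consumes 2,500 MWh against 1,500 MWh for the first, yet its PUE is lower, which is why total consumption should be tracked alongside the ratio.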

The PUE concept was originally developed by The Green Grid in 2006 and was published as an ISO standard in 2016. The Green Grid is an open industry consortium of data center operators, cloud providers, technology and equipment suppliers, facility architects and end users, working globally to improve the energy and resource efficiency of data center ecosystems and to minimize carbon emissions.

PUE remains the most common method for calculating data center energy efficiency. At Munters, for example, PUE is evaluated on both a peak and an annualized basis for each project. In these calculations, only the IT load and the cooling load are considered; this is referred to as either partial PUE (pPUE) or mechanical PUE (PUEM). The peak pPUE is used by electrical engineers to establish the maximum loads and to size back-up generators. The annualized pPUE is used to estimate how much electricity will be consumed during a typical year, and to compare this with other cooling options. PUE may not be a perfect tool, but it is increasingly being supplemented by other measures, such as WUE (water usage effectiveness) and CUE (carbon usage effectiveness), as well as by approaches that can enhance the relevance of PUE, including SPUE (server PUE) and TUE (total PUE).
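The difference between a peak and an annualized figure can be sketched as follows. This assumes hourly average power readings for the IT and cooling loads (so that summing hourly kW values gives kWh) and takes the peak pPUE as the worst single hour; both the data and these definitions are illustrative assumptions, not Munters’ own calculation method.

```python
from typing import Sequence


def _check(it_kw: Sequence[float], cooling_kw: Sequence[float]) -> None:
    if len(it_kw) != len(cooling_kw) or not it_kw:
        raise ValueError("need equal-length, non-empty IT and cooling series")


def peak_ppue(it_kw: Sequence[float], cooling_kw: Sequence[float]) -> float:
    """Highest single-interval pPUE = (IT + cooling) / IT, e.g. the hottest hour."""
    _check(it_kw, cooling_kw)
    return max((i + c) / i for i, c in zip(it_kw, cooling_kw))


def annualized_ppue(it_kw: Sequence[float], cooling_kw: Sequence[float]) -> float:
    """Energy-weighted pPUE: ratio of summed energies, not the mean of hourly ratios."""
    _check(it_kw, cooling_kw)
    total_it = sum(it_kw)
    return (total_it + sum(cooling_kw)) / total_it


# Hypothetical four-hour extract with a constant 1,000 kW IT load.
it = [1000.0, 1000.0, 1000.0, 1000.0]
cooling = [100.0, 150.0, 300.0, 150.0]
print(peak_ppue(it, cooling), annualized_ppue(it, cooling))
```

In practice the annualized series would cover all 8,760 hours of a typical weather year, and the peak would normally fall in the hottest or most humid hours.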

Data center trends

In the past decade, efficient hyperscale data centers have increased their share of total data center energy consumption, while many of the less efficient, traditional data centers have been shut down. Because these newly built hyperscale data centers have been designed for efficiency, total energy consumption has not yet increased dramatically. However, demand for information services and compute-intensive applications is expected to grow, driven by emerging trends such as AI, machine learning, automation and driverless vehicles. The energy demand from data centers is therefore expected to increase, although the size of the increase is the subject of debate. In the best-case scenario, global data center energy consumption will triple by 2030 compared with current demand, but an eightfold increase is considered more likely. These projections include both IT and non-IT infrastructure. The majority of non-IT energy consumption comes from cooling, or more precisely, from rejecting the heat produced by the servers, and cooling alone can easily account for 25 percent or more of total annual energy costs. Cooling is of course a necessity for maintaining IT functionality, but it can be optimized by good design and by the effective operation of building systems.

An important recent trend is an increase in server rack power density, with some racks drawing 30 to 40 kilowatts or more. AFCOM, the industry association for data center professionals, found in its 2020 State of the Data Center report that average rack density had jumped to 8.2 kW per rack, up from 7.3 kW in 2019 and 7.2 kW in 2018. About 68 percent of respondents reported that rack density had increased over the previous three years.

The shift towards cloud computing is certainly boosting the development of hyperscale and co-location data centers. Historically, a 1-megawatt data center would have been designed to meet the needs of a bank or an airline or a university, but many of these institutions and companies are now shifting to cloud services within hyperscaler and co-location data center facilities. As a result of this growing demand, there is an increased requirement for data speed, and of course all of these data centers are serving mission-critical applications, so the reliability of the infrastructure is very important.

There is also an increased focus on edge data centers to reduce latency (delay), and on the adoption of liquid cooling to accommodate high-performance chips and to reduce energy use.

Temperature & humidity control

One of the primary considerations for energy efficiency in air-cooled data centers is hot aisle/cold aisle containment. Unfortunately, containment is still poorly managed in many legacy data centers, leading to low energy efficiency. New data center builds, on the other hand, tend to take containment very seriously, which contributes significantly to their performance.

For many operators, the optimal supply air temperature is between 24 °C and 25.5 °C (75.2 °F and 77.9 °F). However, delta-T, the temperature differential between the hot aisle and the cold aisle, is also very important. Typically, delta-T is around 10 to 12 °C (18 to 21.6 °F), but 14 °C (25.2 °F) is a common objective in data center design. Increasing delta-T has a dual benefit: it reduces the fan motor energy required by the cooling systems, and it increases the potential for economized heat rejection strategies.
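To see why a larger delta-T reduces fan energy, the sensible-heat balance P = ρ·c_p·V̇·ΔT can be rearranged for the airflow V̇. The sketch below assumes constant air properties (ρ ≈ 1.2 kg/m³, c_p ≈ 1005 J/(kg·K)) and the ideal fan affinity law, under which fan power scales with the cube of airflow; real installations typically save less than this ideal figure because of fixed pressure losses and motor and drive efficiencies.

```python
AIR_DENSITY = 1.2   # kg/m^3, assumed constant
AIR_CP = 1005.0     # J/(kg*K), specific heat of dry air, assumed constant


def required_airflow_m3s(heat_load_w: float, delta_t_k: float) -> float:
    """Airflow (m^3/s) needed to carry a sensible heat load at a given delta-T."""
    return heat_load_w / (AIR_DENSITY * AIR_CP * delta_t_k)


def ideal_fan_power_ratio(delta_t_old_k: float, delta_t_new_k: float) -> float:
    """Fan power ratio new/old from the cube-law affinity relation.

    Airflow scales with 1/delta-T, and ideal fan power with airflow cubed.
    """
    flow_ratio = delta_t_old_k / delta_t_new_k
    return flow_ratio ** 3


for dt in (10.0, 12.0, 14.0):
    print(f"delta-T {dt:.0f} K: {required_airflow_m3s(1_000_000.0, dt):.1f} m^3/s per MW")
print(f"11 K -> 14 K: fan power x {ideal_fan_power_ratio(11.0, 14.0):.2f}")
```

Raising delta-T from 11 K to 14 K cuts the required airflow by roughly a fifth, and under the cube law the ideal fan power falls to about half.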

Economization is the process by which outdoor air is used to handle a portion of data center heat rejection. Economization can occur directly, where outdoor air is brought into the cooling systems and delivered to the servers (after appropriate air filtration), or indirectly, where recirculated data center air rejects its heat to ambient by way of an air-to-air heat exchanger. Both approaches lower costs and improve efficiency and sustainability. However, to maintain efficiency, air-side pressure drops due to filtration should be minimized. If air is recirculated within the data center without the introduction of outside air, as in indirect economization, it should be possible to reduce or eliminate the need for filtration altogether.
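The energy cost of filtration follows from the fact that fan power is roughly airflow multiplied by pressure rise, divided by overall fan efficiency. The figures below (70 m³/s of supply air, a 150 Pa filter bank, 60 percent overall fan efficiency, continuous operation) are hypothetical round numbers used only to show the order of magnitude.

```python
HOURS_PER_YEAR = 8760


def fan_power_w(airflow_m3s: float, pressure_drop_pa: float, fan_efficiency: float) -> float:
    """Electrical power (W) to move air through a pressure drop: Q * dp / efficiency."""
    if not 0.0 < fan_efficiency <= 1.0:
        raise ValueError("fan efficiency must be in (0, 1]")
    return airflow_m3s * pressure_drop_pa / fan_efficiency


def annual_energy_mwh(power_w: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy over a year of continuous operation, in MWh."""
    return power_w * hours / 1e6


# Hypothetical: 70 m^3/s of supply air, 150 Pa filter bank, 60 % overall fan efficiency.
filter_fan_w = fan_power_w(70.0, 150.0, 0.6)
print(f"{filter_fan_w / 1000:.1f} kW, {annual_energy_mwh(filter_fan_w):.0f} MWh/year")
```

Because the result is linear in pressure drop, halving the filter resistance at the same airflow halves this energy, and removing the filter stage removes it entirely.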

Cooling and ventilation require careful control, and it is important to deploy high-efficiency fans, maintain a slight positive building pressure, and control room humidity. For example, make-up air systems should keep the space dew point low enough that cooling coils only undertake sensible cooling, without having to handle a latent load (the removal of moisture from the air).
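Whether a coil stays dry can be checked by comparing its surface temperature with the space dew point. This sketch estimates the dew point with the Magnus approximation (coefficients 17.62 and 243.12 °C, a common choice over water); the room condition, the coil surface temperatures and the 1 K safety margin are hypothetical inputs, not design values from the article.

```python
import math

# Magnus approximation coefficients over water.
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # °C


def dew_point_c(temp_c: float, rh_percent: float) -> float:
    """Approximate dew point (°C) from dry-bulb temperature and relative humidity."""
    if not 0.0 < rh_percent <= 100.0:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(rh_percent / 100.0) + MAGNUS_B * temp_c / (MAGNUS_C + temp_c)
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


def coil_is_sensible_only(coil_surface_c: float, space_dew_point_c: float,
                          margin_k: float = 1.0) -> bool:
    """True if the coil surface stays above the space dew point plus a margin,
    so no moisture condenses and the coil removes only sensible heat."""
    return coil_surface_c >= space_dew_point_c + margin_k


space_dp = dew_point_c(24.0, 50.0)  # hypothetical room condition: 24 °C, 50 % RH
print(round(space_dp, 1))
print(coil_is_sensible_only(15.0, space_dp), coil_is_sensible_only(12.0, space_dp))
```

If the coil runs colder than the dew point, the make-up air system would need to dry the space further for the coil to remain sensible-only.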

The overall objective of the heat rejection system is to maintain optimal conditions for the IT equipment while minimizing energy usage. Low humidity, for example, can increase the risk of static electricity, and high humidity can cause condensation, which is a threat to electrical and metallic equipment, increasing the risk of failure and reducing working lifetime. High humidity levels combined with ambient pollutants have also been shown to accelerate the corrosion of components within servers.

Cooling is essential to remove the heat generated by IT equipment, avoid overheating and prevent failures. According to some studies, a rapidly fluctuating temperature can be more harmful to IT devices than a stable, higher temperature, so the stability of the control loop is important from that perspective.
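One simple way to watch for rapid fluctuations is to track the temperature swing (maximum minus minimum) within a sliding time window at each server inlet. In the sketch below, the 2 K limit and 15-minute window are hypothetical placeholders; appropriate values would come from the equipment vendor’s or the site’s own specification.

```python
from collections import deque
from typing import Deque, Tuple


class TemperatureSwingMonitor:
    """Flags when the temperature swing within a sliding time window exceeds a limit."""

    def __init__(self, max_swing_k: float, window_s: float) -> None:
        self.max_swing_k = max_swing_k  # hypothetical limit, in kelvin
        self.window_s = window_s        # hypothetical window length, in seconds
        self._samples: Deque[Tuple[float, float]] = deque()

    def add(self, t_s: float, temp_c: float) -> bool:
        """Record a sample (timestamps must be non-decreasing); True if swing > limit."""
        self._samples.append((t_s, temp_c))
        # Discard samples that have fallen out of the window; the newest always stays.
        while t_s - self._samples[0][0] > self.window_s:
            self._samples.popleft()
        temps = [temp for _, temp in self._samples]
        return max(temps) - min(temps) > self.max_swing_k


monitor = TemperatureSwingMonitor(max_swing_k=2.0, window_s=900.0)
for t, temp in [(0, 24.0), (60, 24.3), (120, 24.1), (180, 26.4)]:
    print(t, monitor.add(t, temp))
```

A swing alarm of this kind complements, rather than replaces, a conventional high-temperature limit.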

The latest IT equipment is typically able to operate at higher temperatures, which means that the intake temperature can be raised and the potential for free cooling and economization is improved. Outdoor air can be used to cool indoor air either directly or indirectly (as noted above), and evaporative or adiabatic cooling can further improve the efficiency of economization. These energy-saving technologies have been extensively deployed, with the trend being towards dry heat rejection strategies that consume no water. As the temperature of the heat extraction medium (air or liquid) rises, the potential for data center waste heat to be put to effective use increases, for example in district heating networks. In Helsinki, Microsoft and the energy group Fortum are collaborating on a project to capture excess heat. The data center will use 100 percent emission-free electricity, and Fortum will transfer the clean heat from the server cooling process to the homes, services and business premises connected to its district heating system. This data center waste heat recycling facility is likely to be the largest of its kind in the world.

The importance of accurate monitoring

In many modern facilities, 99.999% uptime is expected, which corresponds to roughly five minutes of downtime per year. These extremely high levels of performance are necessary because of the importance and value of the data and processes handled by the IT infrastructure.
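The downtime figure follows directly from the availability target, as this short calculation shows (a 365-day year is assumed).

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years ignored


def annual_downtime_minutes(availability: float) -> float:
    """Permitted downtime per year for an availability fraction such as 0.99999."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * MINUTES_PER_YEAR


for availability in (0.999, 0.9999, 0.99999):
    print(f"{availability:.3%} uptime -> {annual_downtime_minutes(availability):.1f} min/year")
```

Each additional “nine” cuts the permitted downtime by a factor of ten, from almost nine hours a year at 99.9% to just over five minutes at 99.999%.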

A key feature in data center design is the delivery of the correct temperature to servers, and this can only be achieved if the control system is able to rely on accurate sensors. Larger data halls can be more challenging to monitor because they have a greater potential for spatial temperature variability, so it is important that there are sufficient numbers of temperature sensors to ensure that all servers are monitored.

Some servers may be close to a cooling unit and others further away; some may be at the bottom of a rack and others higher up, so temperature can vary in all three dimensions. In addition to deploying a sufficient number of sensors, it is therefore also important for air flow and cooling to be optimally distributed throughout the server room. With a combination of proper design and monitoring, cooling and air flow can be controlled efficiently to meet the required specification.
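A small sketch of how readings from sensors at different racks and heights might be screened for hot spots. The rack names, positions and readings are hypothetical, and the 25.5 °C limit is simply the upper end of the supply temperature range quoted earlier, used here as an example threshold.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class InletReading:
    rack: str       # hypothetical rack identifier
    position: str   # e.g. "bottom" or "top" of the rack
    temp_c: float   # measured inlet air temperature


def hot_spots(readings: Sequence[InletReading], limit_c: float) -> List[InletReading]:
    """Readings above the limit, hottest first."""
    above = [r for r in readings if r.temp_c > limit_c]
    return sorted(above, key=lambda r: r.temp_c, reverse=True)


def spread_k(readings: Sequence[InletReading]) -> float:
    """Spatial spread: hottest minus coldest inlet reading."""
    temps = [r.temp_c for r in readings]
    return max(temps) - min(temps)


readings = [
    InletReading("A1", "bottom", 23.8),
    InletReading("A1", "top", 25.1),
    InletReading("B7", "bottom", 24.2),
    InletReading("B7", "top", 27.0),
]
for r in hot_spots(readings, limit_c=25.5):
    print(r.rack, r.position, r.temp_c)
print(round(spread_k(readings), 1))
```

With only one sensor per rack, the hot reading at the top of rack B7 could easily be missed, which is the argument for monitoring at several heights.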

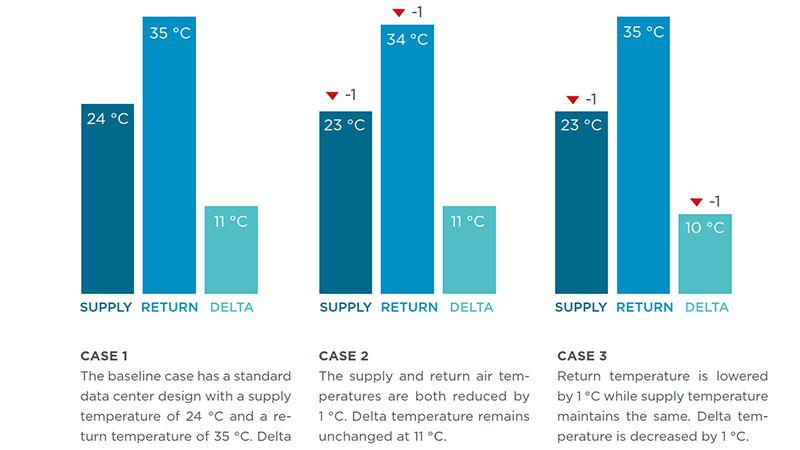

To evaluate the effects of different variables on average annual energy usage, Munters modelled its system operating under three different control regimes at three different locations, each with a 1-megawatt IT equipment (ITE) load:

- The base case scenario has a design supply temperature of 24 °C (75.2 °F) and a return temperature of 35 °C (95 °F), giving delta-T = 11 °C (19.8 °F).

- In the second case, the supply and return temperatures were both lowered by 1 °C (1.8 °F), maintaining delta-T.

- In the third case, only the return temperature was lowered by 1 °C (1.8 °F), reducing delta-T.

In all three scenarios, energy usage was lower at the location with the milder climate. Scenario #2 showed 1 to 2 percent additional energy usage from lowering the supply and return temperatures by just one degree. Scenario #3 showed the most significant increase: lowering only the return temperature by 1 °C (and thereby reducing delta-T) increased energy usage by 8 to 9 percent at all three locations. This large effect from a small deviation in temperature highlights the importance of both delta-T and sensor accuracy.
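The link between sensor accuracy and delta-T can be illustrated with a simple, hypothetical control strategy: a controller that adjusts airflow to hold the measured delta-T at its setpoint. This is not the Munters model behind the figures above; it only shows, under that assumption, how a 1 °C bias in one sensor shifts the true delta-T and therefore the airflow delivered, since for a fixed heat load airflow scales with 1/ΔT.

```python
def true_delta_t(setpoint_k: float, supply_bias_k: float, return_bias_k: float) -> float:
    """True delta-T when the controller holds the *measured* delta-T at setpoint.

    measured = (T_return + return_bias) - (T_supply + supply_bias) = setpoint,
    so the true value is setpoint - return_bias + supply_bias.
    """
    return setpoint_k - return_bias_k + supply_bias_k


def relative_airflow(setpoint_k: float, supply_bias_k: float, return_bias_k: float) -> float:
    """Airflow relative to the bias-free case; for a fixed heat load, airflow ~ 1/delta-T."""
    return setpoint_k / true_delta_t(setpoint_k, supply_bias_k, return_bias_k)


# Hypothetical: 11 K setpoint, return sensor reading 1 K high.
print(true_delta_t(11.0, 0.0, 1.0), round(relative_airflow(11.0, 0.0, 1.0), 2))
```

Here the system moves about 10 percent more air than necessary, and fan power rises faster than airflow. A supply sensor reading high has the opposite effect: the true delta-T rises and airflow falls, which could leave servers short of cooling air.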

Whatever cooling methods are employed, it is crucially important to control HVAC processes and indoor conditions in a reliable manner. To achieve this, data center managers need to be able to depend on continuous accurate measurements, because the control loop can only be as good as the measurements. For this reason, high quality sensors are enablers of efficiently controlled HVAC processes and a stable indoor environment. However, sensor specification at the time of installation is not necessarily an indicator of long-term performance reliability. The real value of a sensor is derived over its entire lifecycle, because frequent requirements for maintenance can be costly, and as outlined in the Munters models, even small deviations in accuracy can lead to inflated energy costs.
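The lifecycle argument can be put in numbers by asking how long a sensor stays within its accuracy budget. The sketch assumes linear drift in the same direction as the initial error (a worst-case simplification); the 0.5 K tolerance and both drift rates are hypothetical values, not specifications of any product.

```python
import math


def years_within_tolerance(initial_error_k: float, drift_k_per_year: float,
                           tolerance_k: float) -> float:
    """Years until a sensor's error exceeds its tolerance, assuming linear drift
    in the same direction as the initial error (worst case)."""
    headroom = tolerance_k - abs(initial_error_k)
    if headroom < 0:
        return 0.0  # already out of tolerance at installation
    if drift_k_per_year == 0:
        return math.inf
    return headroom / abs(drift_k_per_year)


# Hypothetical sensors, both 0.1 K off at installation, with a 0.5 K tolerance.
for name, drift in (("stable", 0.05), ("drifting", 0.4)):
    print(name, round(years_within_tolerance(0.1, drift, 0.5), 1), "years")
```

Under these assumptions the drifting sensor would need recalibration every year, while the stable one could run for several years between calibrations.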

In most cases, the value of the data in the IT infrastructure is extremely high, and often mission-critical, so it would make no sense to deploy low-cost sensors if that results in high maintenance costs, or risks to high-value data. Users should therefore seek durable measurement devices that are able to deliver reliable, stable readings in the long run, because it is that lifelong reliability that really matters.

Measurement technology for demanding environments

A focus on the accuracy, reliability and stability of measurement devices has been a key brand value for Vaisala since its foundation over 86 years ago. These features are therefore fundamental components of the design remit for every Vaisala product. Demonstrating the advantages of these features, Vaisala sensors have been operating on the planet Mars for over eight years, delivering problem-free data in harsh conditions aboard NASA’s Curiosity rover, and more recently on the Perseverance rover.

Data centers represent a less challenging environment than outer space, but reliable sensors are equally important given the essential service that data centers provide to businesses, economies and societies worldwide.

Factors affecting the choice of sensors

1. Reliability

The accuracy of the sensor at the point of installation is obviously important, but it is vital that the sensor remains accurate over the long term, delivering stable readings. Given the high value of data centers, and their frequently remote locations, the lifetime of the transmitters should be well above the norm. The manufacturer should therefore have experience in the sector, coupled with a reputation for reliable measurement in critical environments. Traceable calibration certificates provide assurance that sensors were performing correctly before leaving the factory, and proven reliability means that this level of accuracy can be maintained over the long term.

2. Maintenance

Sensors with a high maintenance requirement should be avoided, not just because of the costs involved, but also because such sensors carry a higher risk of failure. In addition, sensors that drift or lose accuracy can result in substantial excess energy costs, as explained above. The high levels of uptime required by data centers mean that maintenance of monitoring equipment should not disturb the operation of the data center. Consequently, instruments with exchangeable measurement probes or modules, such as Vaisala’s, are advantageous, not least because they allow sensors to be removed and calibrated off-line. Importantly, if a measurement probe or module is exchanged, the calibration certificate should also be updated. Ideally, it should be possible to carry out maintenance on-site with tools from the instrument provider, as part of a scheduled maintenance program.

3. Sustainability

From a sensor perspective, the latest technologies allow users to upgrade just the measurement part of a sensor instead of replacing or scrapping the whole transmitter, thereby helping to avoid unnecessary waste. The environmental and sustainability credentials of suppliers should be taken into consideration when making buying decisions. This enables sustainability to cascade down supply chains and creates a driver for all businesses, large or small. Sustainability is at the heart of both Munters and Vaisala. Munters, for example, has over 1.5 gigawatts of data center cooling equipment installed globally, delivering energy savings equivalent to two percent of Sweden’s annual energy consumption. Vaisala was recently listed in the Financial Times’ top 5 European Climate Leaders 2022. The list includes European companies that achieved the greatest reduction in their greenhouse gas emissions between 2015 and 2020.

Summary

With critical data worth billions of dollars being processed and stored at data centers, power-hungry servers must be maintained in ideal temperature and humidity conditions to prevent downtime. At the same time, there are urgent demands for lower greenhouse gas emissions, improved energy efficiency, lower energy costs and better PUE measures; all at a time of spiraling energy costs. This ‘perfect storm’ of drivers means that the accurate control and optimization of HVAC processes is extremely important.

About the writers

Keith Dunnavant is VP Sales at Munters and in charge of its data center business in the Americas. Anu Kätkä is Product Manager at Vaisala and in charge of Vaisala’s global HVAC and data center product area.